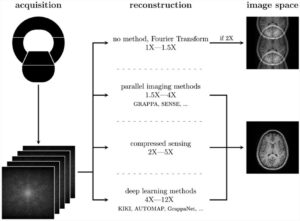

Radiomics studies often include a feature selection step to remove redundant and irrelevant features from the generic features extracted from radiological images. When feature selection is combined with cross-validation, however, it must be applied within each fold separately, using only that fold's training data. If it is instead applied once to all data as a preprocessing step, information from the test folds leaks into model building, and the resulting performance estimate is positively biased.
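The difference between the two protocols can be made concrete with a small simulation. The following is a minimal sketch under stated assumptions, not the method used in the paper: the data are pure noise (so the true AUC-ROC is 0.5), the selector is a simple class-mean-gap filter, the classifier is a nearest-centroid-style linear rule, and all function names (`make_noise_data`, `select_top_k`, `cross_validated_auc`, and so on) are hypothetical and introduced only for illustration.

```python
import random
from statistics import fmean


def make_noise_data(n_samples=40, n_features=500, seed=0):
    """Pure-noise features with balanced labels: the true AUC-ROC is 0.5."""
    rng = random.Random(seed)
    X = [[rng.gauss(0.0, 1.0) for _ in range(n_features)] for _ in range(n_samples)]
    y = [i % 2 for i in range(n_samples)]
    return X, y


def class_means(X, y, j):
    """Mean of feature j in the positive and in the negative class."""
    pos = fmean([row[j] for row, label in zip(X, y) if label == 1])
    neg = fmean([row[j] for row, label in zip(X, y) if label == 0])
    return pos, neg


def select_top_k(X, y, k):
    """Filter selection: keep the k features with the largest class-mean gap."""
    gaps = [abs(pos - neg) for pos, neg in (class_means(X, y, j) for j in range(len(X[0])))]
    return sorted(range(len(gaps)), key=gaps.__getitem__, reverse=True)[:k]


def centroid_scores(X_train, y_train, X_test, features):
    """Score test rows with a nearest-centroid-style linear rule on `features`."""
    params = []
    for j in features:
        pos, neg = class_means(X_train, y_train, j)
        params.append((j, pos - neg, (pos + neg) / 2.0))
    return [sum(w * (row[j] - mid) for j, w, mid in params) for row in X_test]


def auc(scores, labels):
    """AUC-ROC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for s, label in zip(scores, labels) if label == 1]
    neg = [s for s, label in zip(scores, labels) if label == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def cross_validated_auc(X, y, k=10, n_folds=5, leaky=False):
    """Mean per-fold AUC-ROC; `leaky=True` selects features once on all data."""
    # Consecutive samples have opposite labels, so assigning them in pairs
    # keeps every fold class-balanced.
    fold_of = [(i // 2) % n_folds for i in range(len(X))]
    global_features = select_top_k(X, y, k) if leaky else None
    fold_aucs = []
    for fold in range(n_folds):
        train = [i for i, f in enumerate(fold_of) if f != fold]
        test = [i for i, f in enumerate(fold_of) if f == fold]
        X_tr, y_tr = [X[i] for i in train], [y[i] for i in train]
        X_te, y_te = [X[i] for i in test], [y[i] for i in test]
        # Correct protocol: selection sees only the training part of this fold.
        features = global_features if leaky else select_top_k(X_tr, y_tr, k)
        fold_aucs.append(auc(centroid_scores(X_tr, y_tr, X_te, features), y_te))
    return fmean(fold_aucs)


if __name__ == "__main__":
    X, y = make_noise_data()
    print("selection on all data (leaky):", round(cross_validated_auc(X, y, leaky=True), 3))
    print("selection inside each fold:   ", round(cross_validated_auc(X, y, leaky=False), 3))
```

Because the features carry no signal, any AUC-ROC well above 0.5 in the leaky variant comes from the selection step having already seen the test folds. The sketch is dependency-free on purpose. In practice, the same effect is usually achieved by wrapping selection and classifier into a single pipeline object that is refit on each training fold.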

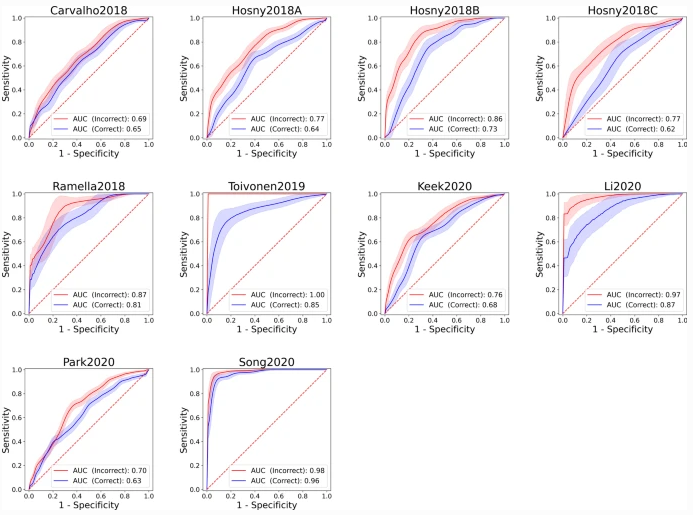

In this paper, we measure the extent of this bias in terms of AUC-ROC on 10 publicly available radiomics datasets. Our results show that incorrectly applied feature selection can cause a large positive bias of up to 0.15 in AUC-ROC. Care must therefore be taken to apply feature selection correctly within cross-validation, particularly when no external validation data is used.

We estimate that approximately 10-15% of all radiomics studies that rely on cross-validation alone misapply feature selection, which exacerbates the reproducibility problem in radiomics. We hope that our results help to prevent this misapplication.

Key points

- Incorrectly applying feature selection on the whole dataset before cross-validation can cause a large positive bias.

- Datasets with higher dimensionality, i.e., more features per sample, are more prone to positive bias.

Authors: Aydin Demircioğlu